Ready to get started?

Select the smart device that’s best for them, so they can start living their healthiest, happiest life.

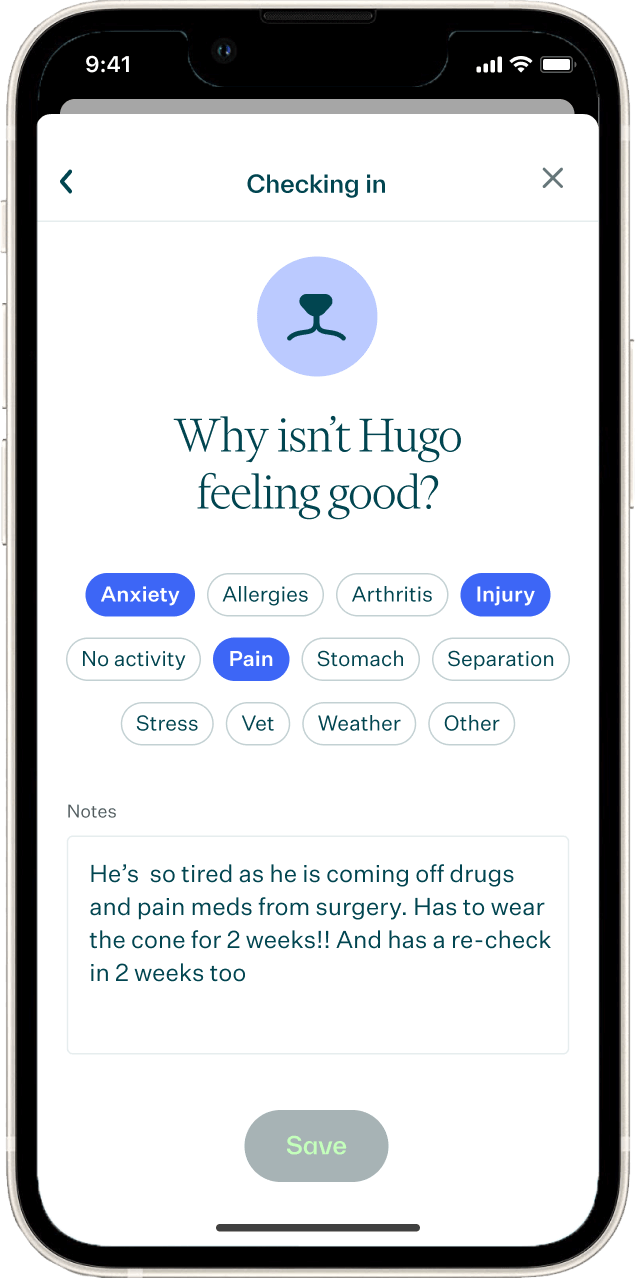

Help your pup through allergy season

Allergies are in full bloom, but that doesn't mean your pet has to suffer. A Whistle smart device can help you track licking and scratching so you can detect signs of allergies faster.

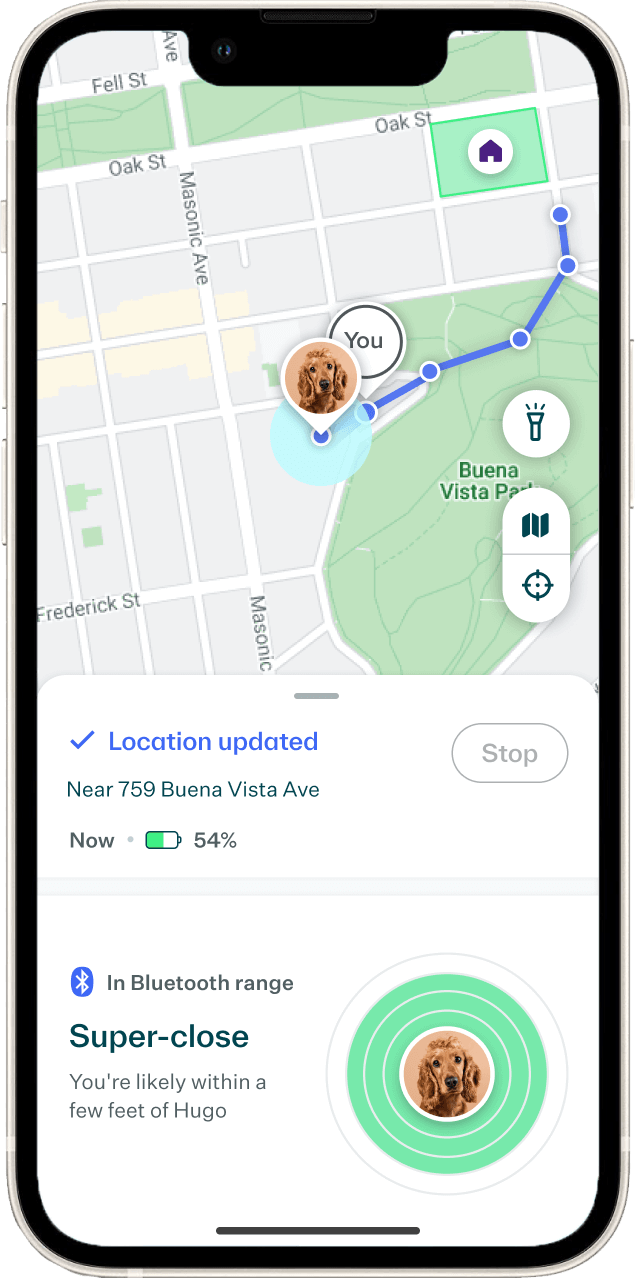

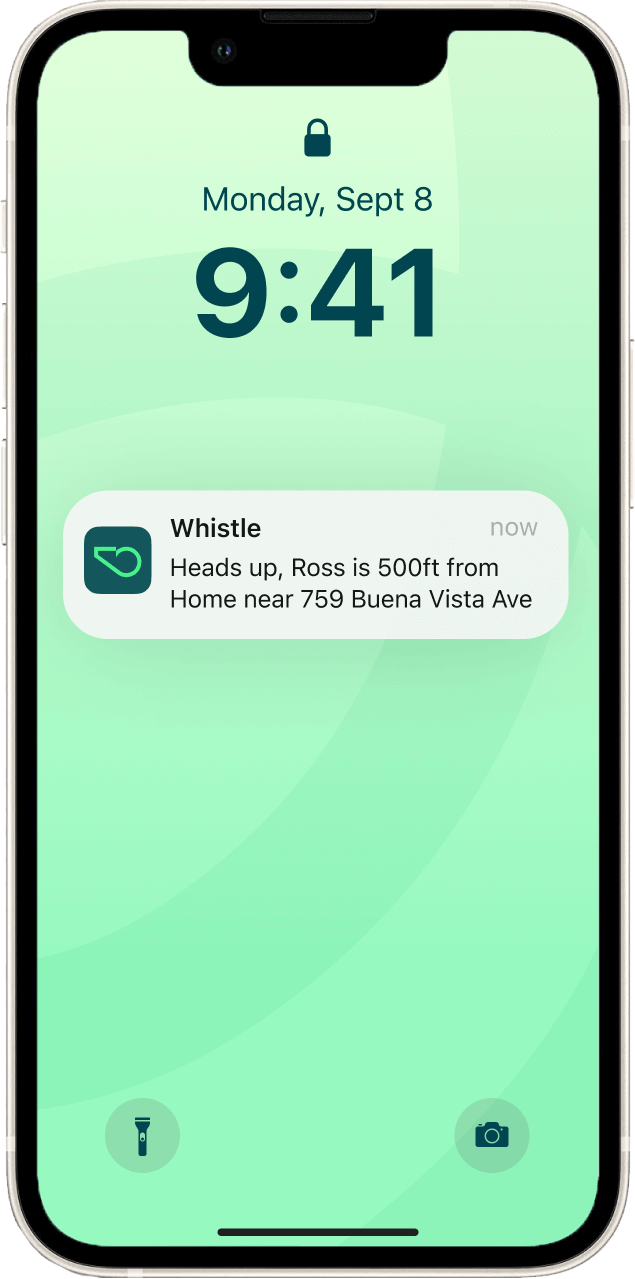

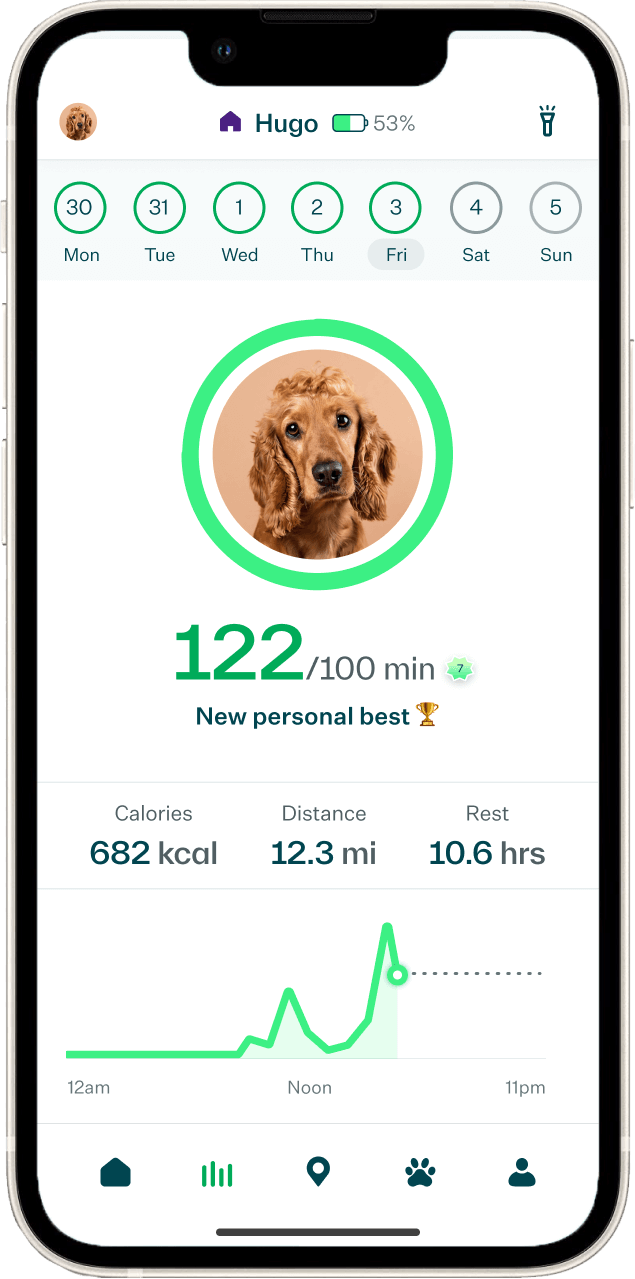

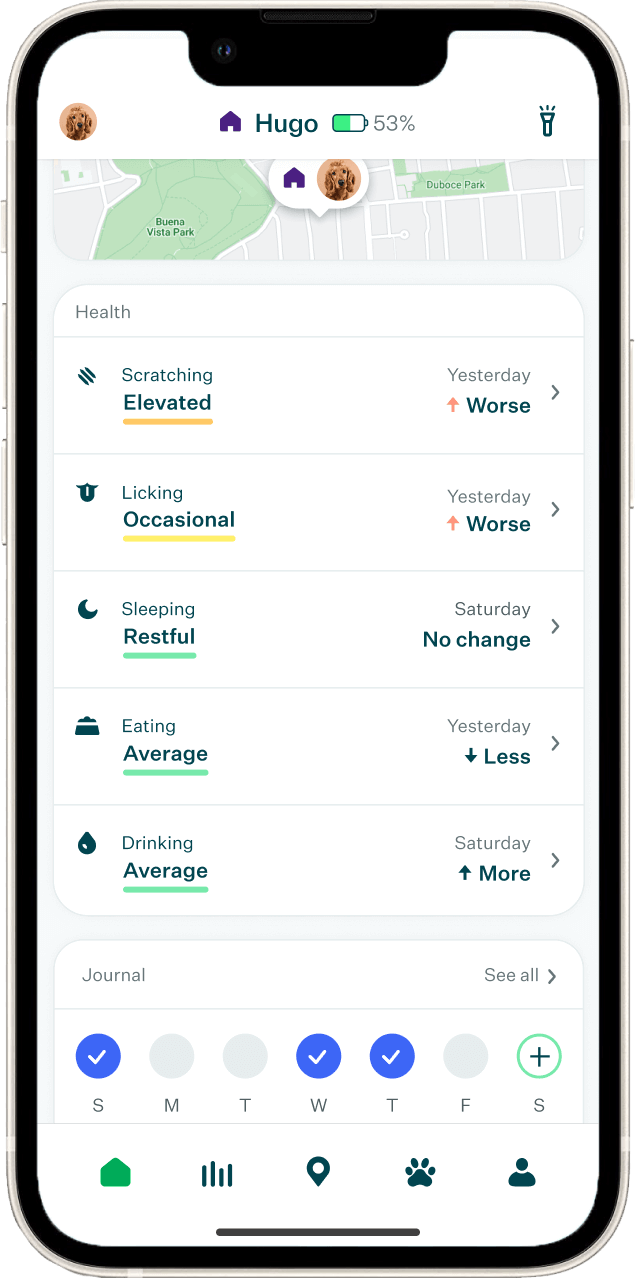

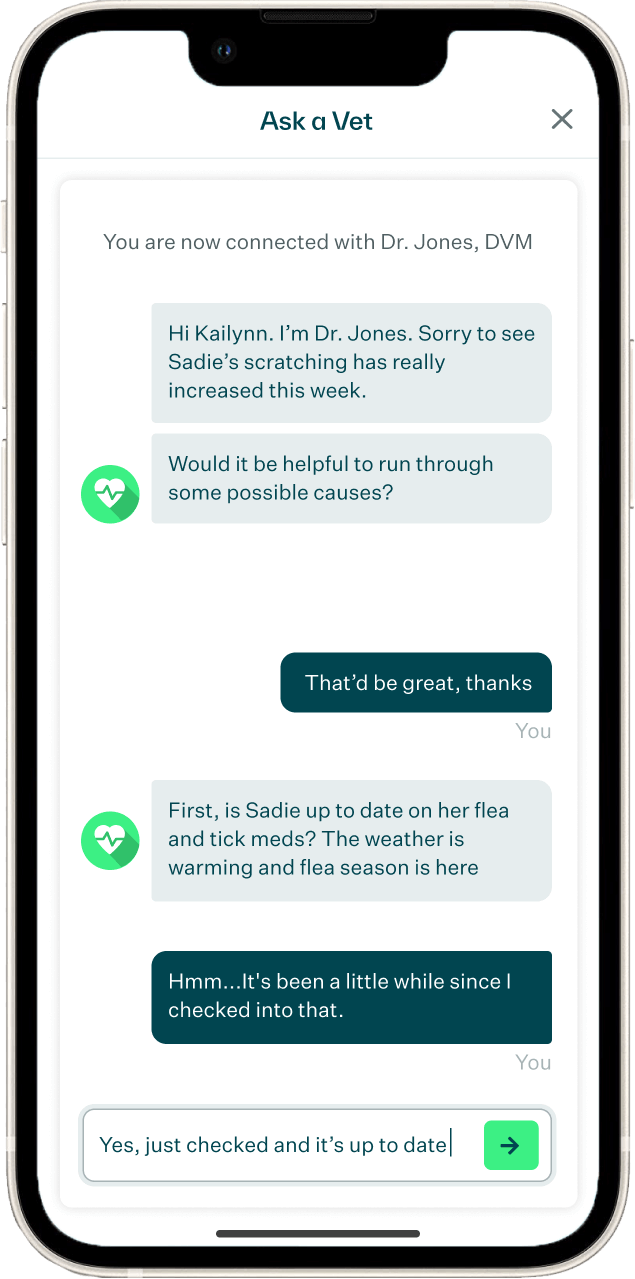

Our pioneering tech translates billions of data points into easy-to-understand insights, all available in the connected app, so you can manage their health, track their location, and deliver a whole new level of care.

of pet parents don’t realize their pet is overweight.

of pets felt more comfortable after managing skin and coat issues with Whistle.

$69

Get the full picture of your pet’s health by tracking their behavior, activity, and wellness.

$129

This GPS smart tracker includes all the features of Whistle Health, plus location updates and escape alerts.

$149

Get all the features of Whistle Go Explore 2.0, plus an integrated collar and interchangeable batteries for 24/7 tracking.

So far, we’ve tracked…

A world of research powers every Whistle device

Our AI is powered by the Pet Insight Project, an award-winning team of vets and data scientists.

The experts on the Pet Insight team have studied the health data of more than 100,000 dogs, paired with medical records from Banfield Pet Hospital, to create the patented AI that precisely tracks and translates pet health.

